/1000 Pages with Redirects

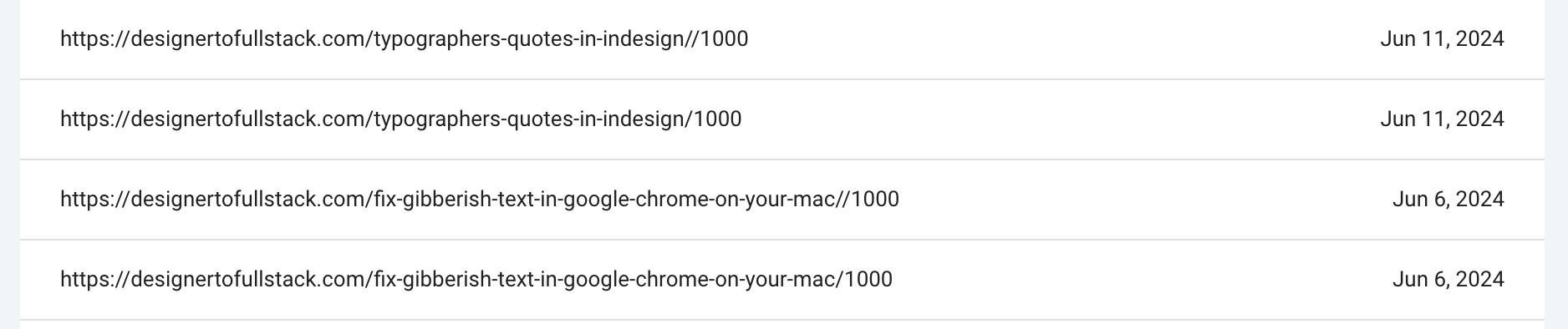

Recently, one of my sites started showing more than twenty “Pages with Redirects” in Google Search Console. Each one was an accurate blog page URL with /1000 added to the end. Here are the spammy links I found, the research I did, and the fix I implemented.

/1000 URL Research

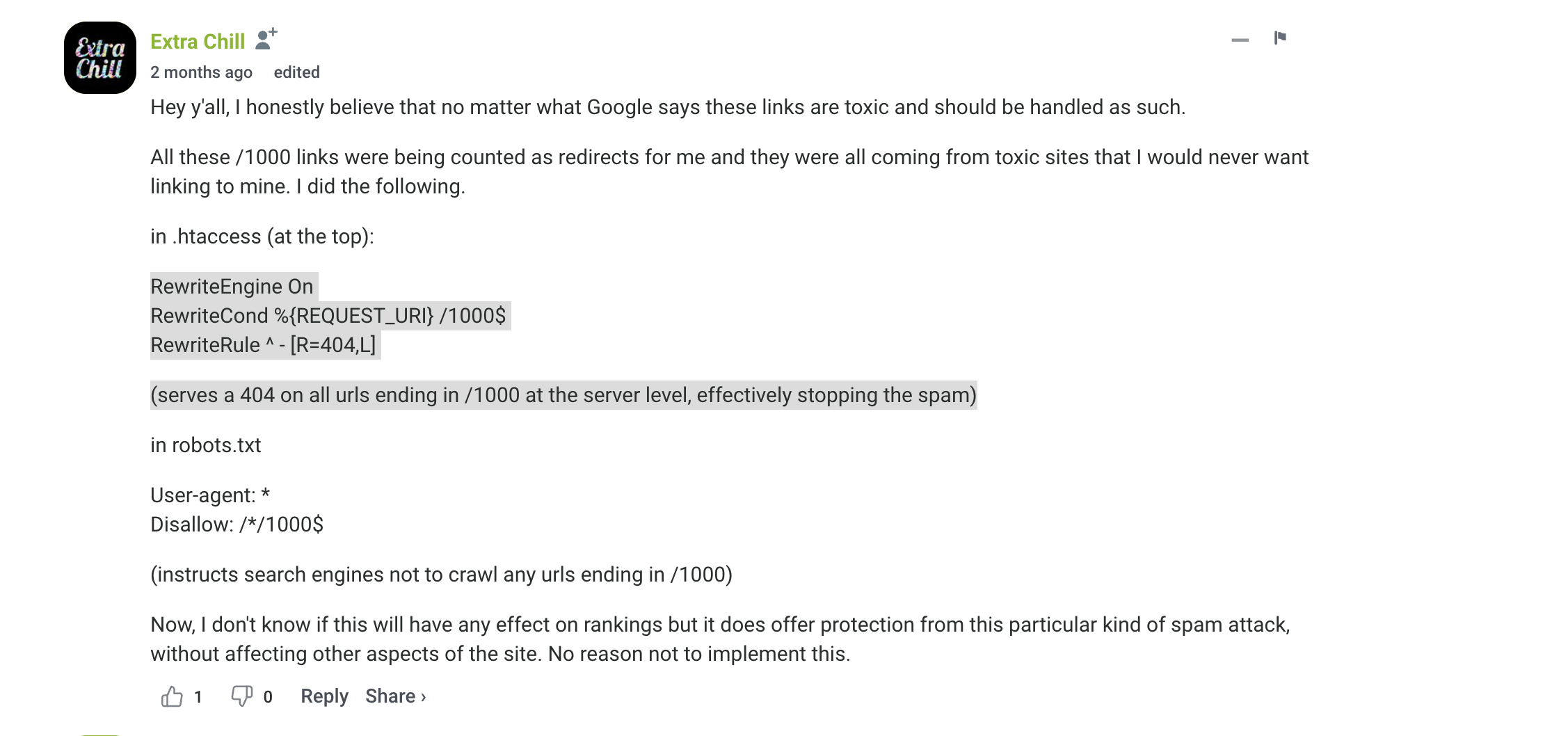

While researching this error, I found mixed opinions on whether these URLs harm a site’s SEO. I decided to fix the issue at the server level anyway because, as Extra Chill wrote on the seroundtable.com forum, “Hey y’all, I honestly believe that no matter what Google says these links are toxic and should be handled as such. All these /1000 links were being counted as redirects for me and they were all coming from toxic sites that I would never want linking to mine.”

Here is Extra Chill’s suggested fix that I implemented.

Block Search Engines from Crawling URLs Ending in /1000

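First, use your robots.txt file to block search engines from crawling any URL ending in /1000. Add the following Disallow rule, where the * wildcard matches any sequence of characters and the $ anchors the match to the end of the URL: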

Disallow: /*/1000$
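If you want to sanity-check which paths this rule covers, here is a minimal Python sketch. It assumes Google's documented robots.txt wildcard semantics (* matches any sequence of characters, a trailing $ anchors the end of the URL) and evaluates only this single rule, ignoring Allow lines and rule precedence. The function names are hypothetical helpers for illustration, not part of any library.

```python
import re


def robots_rule_to_regex(rule_path: str) -> "re.Pattern[str]":
    """Translate a robots.txt path pattern such as "/*/1000$" into a regex.

    Assumes Google-style wildcards: '*' matches any sequence of characters,
    and a trailing '$' means the URL must end at that point.
    """
    anchored = rule_path.endswith("$")
    if anchored:
        rule_path = rule_path[:-1]
    # Escape regex metacharacters, then turn each escaped '*' back into '.*'.
    body = re.escape(rule_path).replace(r"\*", ".*")
    # '\Z' matches only at the very end of the string; '$' would also match
    # just before a trailing newline.
    return re.compile(body + (r"\Z" if anchored else ""))


def is_blocked(path: str, rule_path: str = "/*/1000$") -> bool:
    """Return True if `path` (path plus any query string) matches the rule.

    robots.txt rules are matched from the start of the path, hence re.match.
    """
    return robots_rule_to_regex(rule_path).match(path) is not None


if __name__ == "__main__":
    for p in ["/my-post/1000", "/my-post//1000", "/1000", "/my-post/10000", "/my-post/"]:
        print(p, is_blocked(p))
```

With the default rule, /my-post/1000 and /my-post//1000 are blocked, while /my-post/10000 is not. A bare /1000 at the site root is also not matched, because the pattern requires a path segment before /1000.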

Where do I find my robots.txt?

Your robots.txt exists at the root of your website: example.com/robots.txt

Where you edit it depends on your hosting and the type of website. On a WordPress website with Yoast SEO installed, the robots.txt editor is under Yoast SEO > Tools > File Editor. If you don’t have a robots.txt file yet, you may need to generate one first. Here’s the Yoast resource that explains how to generate your robots.txt file.
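Because robots.txt always sits at the root of the host, you can work out which file governs any spammy URL by keeping its scheme and host and swapping in /robots.txt. Here is a small sketch using only Python's standard library; the helper name robots_txt_url is hypothetical.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_txt_url(page_url: str) -> str:
    """Return the robots.txt URL for the host that serves `page_url`.

    Keeps the scheme and host (including any port) and replaces the path,
    query string, and fragment with "/robots.txt".
    """
    parts = urlsplit(page_url)
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


if __name__ == "__main__":
    print(robots_txt_url("https://example.com/blog/my-post/1000"))
```

Since the file is per host, www.example.com and example.com each have their own robots.txt.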

If your robots.txt already contains an empty Disallow line, which blocks nothing:

Disallow:

replace it with:

Disallow: /*/1000$
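If you manage robots.txt as a plain file, the same edit can be scripted. This is a hypothetical Python sketch, assuming the empty line is written exactly as Disallow: and that you want the /1000 rule in every group that had one. It leaves the text untouched if the rule is already present or if there is no empty Disallow line to replace.

```python
NEW_RULE = "Disallow: /*/1000$"


def add_1000_rule(robots_txt: str, new_rule: str = NEW_RULE) -> str:
    """Swap each empty 'Disallow:' line in `robots_txt` for `new_rule`."""
    lines = robots_txt.splitlines()
    # Rule already applied: return the file as-is.
    if any(line.strip() == new_rule for line in lines):
        return robots_txt
    # Nothing to replace: return the file as-is and add the rule by hand.
    if not any(line.strip() == "Disallow:" for line in lines):
        return robots_txt
    # An empty "Disallow:" blocks nothing, so repurposing it blocks only /1000 URLs.
    updated = [new_rule if line.strip() == "Disallow:" else line for line in lines]
    return "\n".join(updated) + "\n"


if __name__ == "__main__":
    print(add_1000_rule("User-agent: *\nDisallow:\n"))
```

When the sketch leaves your file unchanged because it has no empty Disallow line, you would add the rule by hand under the User-agent group it should apply to.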

Make these spammy links 404

The next step uses your .htaccess file to make these spammy links return a 404 error instead of a 301 redirect. Note that your .htaccess file only needs “RewriteEngine On” once, so if that line is already there, you can leave it out of this snippet. At the server level, this snippet serves a 404 error for any URL ending in /1000.

# Serves a 404 error to any URL ending in /1000

RewriteEngine On

RewriteCond %{REQUEST_URI} /1000$

RewriteRule ^ - [R=404,L]

Fixes Spam Traffic

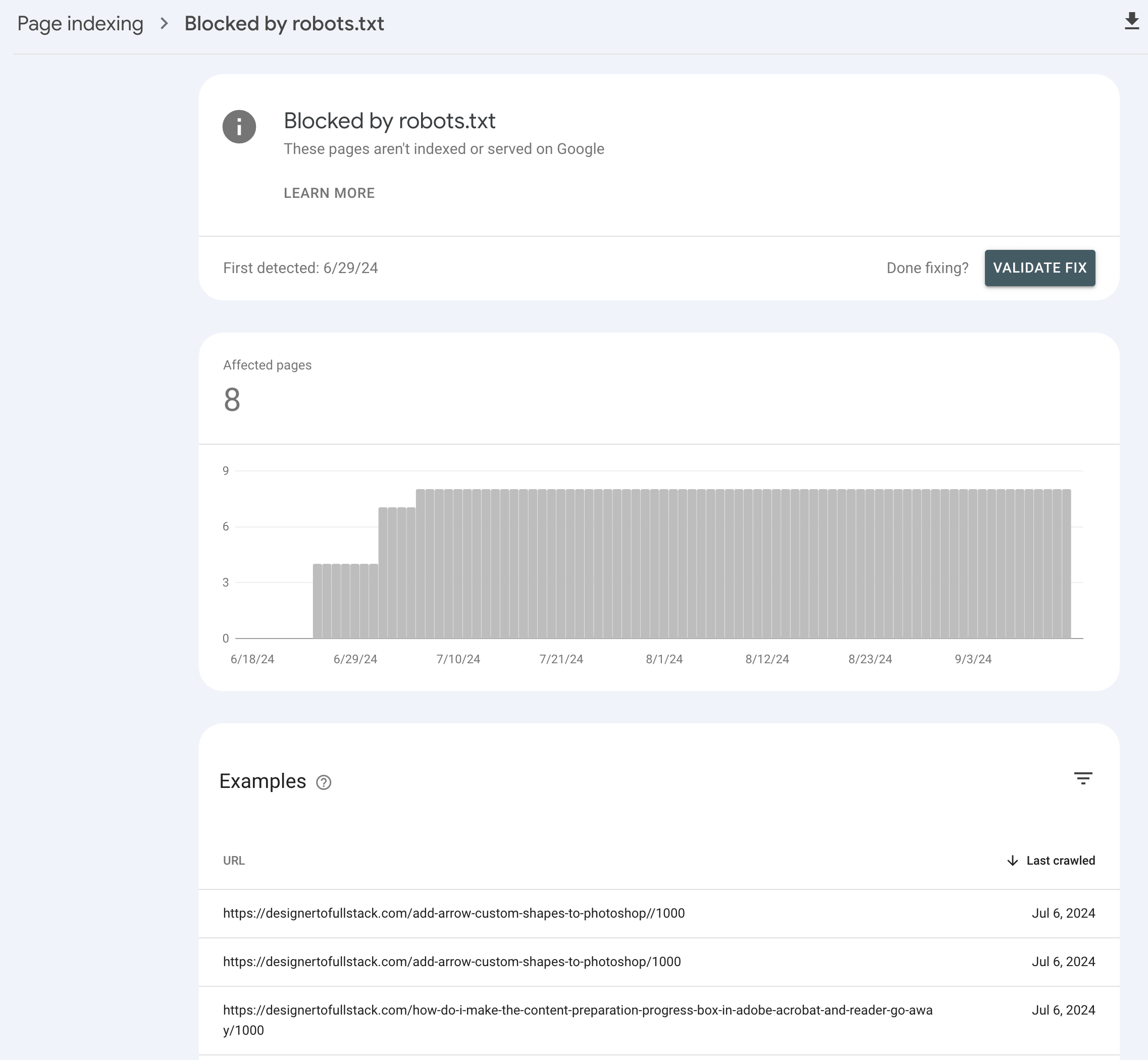

This two-step fix stops the spam traffic generated by URLs ending in /1000 or //1000, resulting in the Google Search Console view in the screenshot. You can see that the 8 pages in my list with /1000 at the end of the URL are now blocked by my robots.txt.
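To double-check which requests the .htaccess condition sends to a 404, the following Python sketch approximates RewriteCond %{REQUEST_URI} /1000$ with the standard re module. It assumes this simple pattern behaves the same in Python as in Apache's PCRE engine and that %{REQUEST_URI} holds only the path, without the query string. The name would_return_404 is hypothetical.

```python
import re
from urllib.parse import urlsplit

# Same pattern as the RewriteCond line: "/1000" at the very end of the path.
REWRITE_COND = re.compile(r"/1000$")


def would_return_404(url: str) -> bool:
    """Approximate the RewriteCond %{REQUEST_URI} /1000$ check for `url`.

    %{REQUEST_URI} is the path only, so the query string is dropped first.
    re.search mirrors mod_rewrite, which matches an unanchored pattern
    anywhere in the string.
    """
    path = urlsplit(url).path
    return REWRITE_COND.search(path) is not None


if __name__ == "__main__":
    print(would_return_404("https://example.com/my-post/1000"))
```

Compared with the robots.txt rule, this condition also catches a bare /1000 at the site root and a /1000 followed by a query string, because it only looks at the end of the path.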

Happy Optimizing!

Want to better understand domain redirects? Check out our resource that dives into the basics of domains and subdomains and why it’s important to have redirects established for both www and non-www versions of your website.

Hi Kelly

Thank you for the instructions; I have the same problem. However, I don’t think this is a good fix, because spammers can simply change the number at the end of the URL and create the same duplicate content on your website.

The core of the problem is that WordPress tries to fix the URL. Instead, it should return a 404 for all unknown URLs, but I haven’t found a reliable way to do that yet.

Thank you for sharing your thoughts. In case it helps others, this was a one-time spam issue that has never happened again on any of my sites.